PSI/J


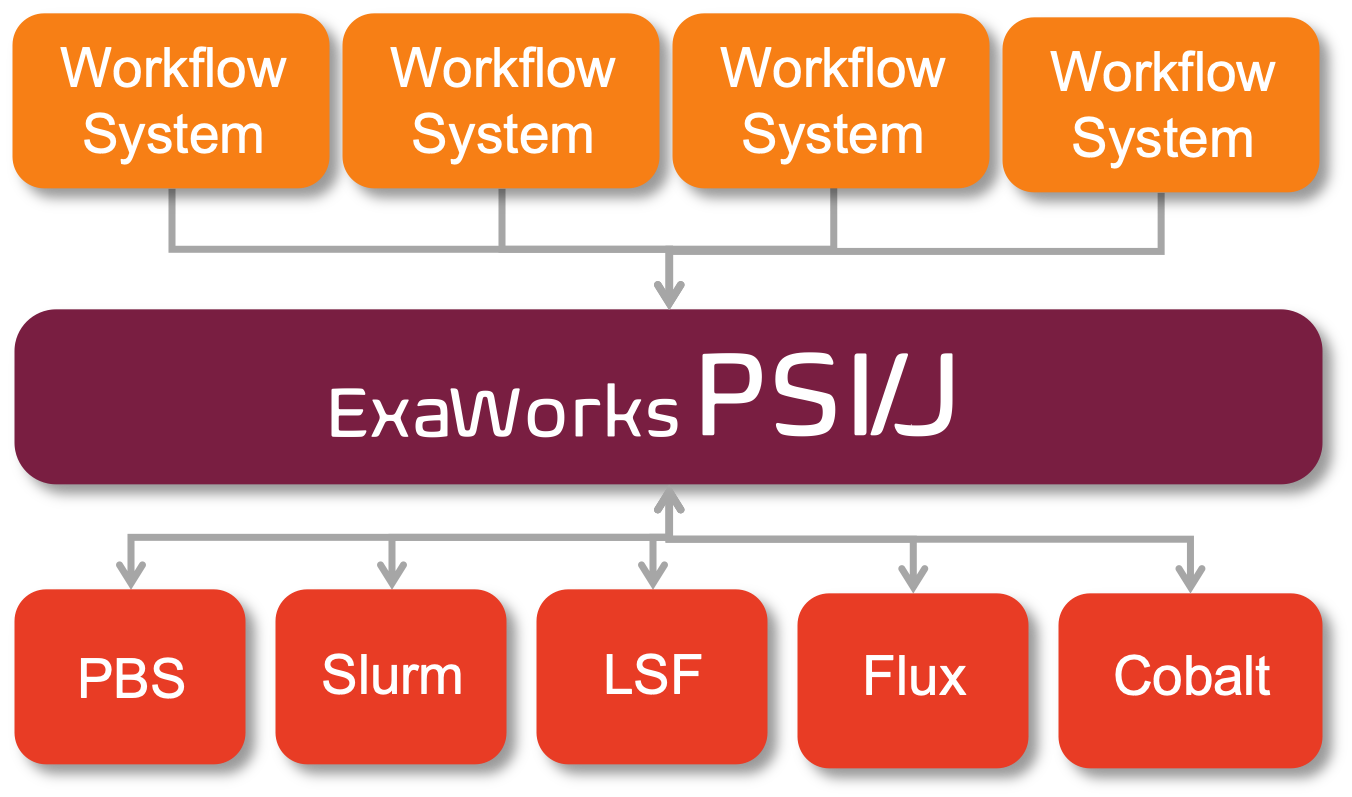

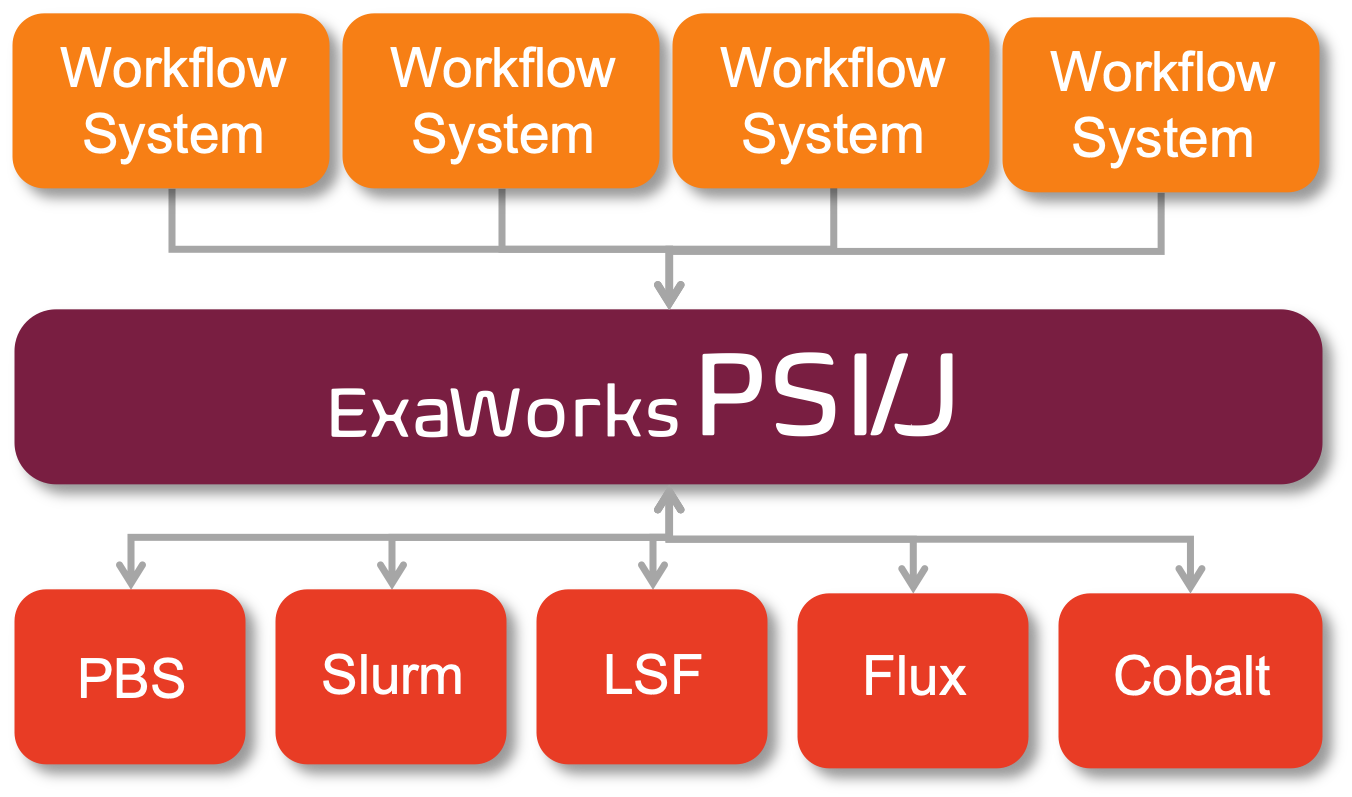

Portable Submission Interface for Jobs

A Python abstraction layer over cluster schedulers

Write Scheduler-Agnostic HPC Applications

Use a unified API to run your HPC application virtually anywhere. Tested on a wide variety of clusters, PSI/J automatically translates abstract job specifications into concrete scripts and commands to send to the scheduler.
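
To illustrate the idea of turning one abstract job specification into scheduler-specific scripts, here is a minimal Python sketch. It is not PSI/J's implementation or API: `AbstractJob`, `render_slurm`, `render_pbs`, and `render` are hypothetical names invented for this example, and the generated scripts use only a handful of common `#SBATCH` and `#PBS` directives.

```python
import shlex
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class AbstractJob:
    """Scheduler-neutral job description (hypothetical, not PSI/J's own class)."""
    name: str
    executable: str
    arguments: List[str] = field(default_factory=list)
    nodes: int = 1
    walltime_minutes: int = 10
    queue: str = ""  # empty string means "use the scheduler's default queue"


def _hms(minutes: int) -> str:
    """Format a duration in minutes as HH:MM:SS."""
    hours, mins = divmod(minutes, 60)
    return f"{hours:02d}:{mins:02d}:00"


def _command(job: AbstractJob) -> str:
    """Build a shell-safe command line from the executable and its arguments."""
    return " ".join(shlex.quote(part) for part in [job.executable, *job.arguments])


def render_slurm(job: AbstractJob) -> str:
    """Render the job as a Slurm batch script."""
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job.name}",
        f"#SBATCH --nodes={job.nodes}",
        f"#SBATCH --time={_hms(job.walltime_minutes)}",
    ]
    if job.queue:
        lines.append(f"#SBATCH --partition={job.queue}")
    lines.append(_command(job))
    return "\n".join(lines) + "\n"


def render_pbs(job: AbstractJob) -> str:
    """Render the job as a PBS batch script (Torque-style node request;
    resource syntax varies between PBS flavors and sites)."""
    lines = [
        "#!/bin/bash",
        f"#PBS -N {job.name}",
        f"#PBS -l nodes={job.nodes}",
        f"#PBS -l walltime={_hms(job.walltime_minutes)}",
    ]
    if job.queue:
        lines.append(f"#PBS -q {job.queue}")
    lines.append(_command(job))
    return "\n".join(lines) + "\n"


# Registry mapping a scheduler name to the function that renders its script.
RENDERERS: Dict[str, Callable[[AbstractJob], str]] = {
    "slurm": render_slurm,
    "pbs": render_pbs,
}


def render(job: AbstractJob, scheduler: str) -> str:
    """Translate the same abstract job into a script for the named scheduler."""
    try:
        return RENDERERS[scheduler](job)
    except KeyError:
        raise ValueError(f"no renderer for scheduler {scheduler!r}") from None


if __name__ == "__main__":
    demo = AbstractJob(name="demo", executable="/bin/echo",
                       arguments=["hello world"], nodes=2, walltime_minutes=90)
    for scheduler in RENDERERS:
        print(f"--- {scheduler} ---")
        print(render(demo, scheduler))
```

The application code only ever builds an `AbstractJob`; switching clusters means changing the scheduler name, not the job description. A real abstraction layer must also handle submission, status tracking, and many more job attributes, which this sketch leaves out.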

PSI/J runs entirely in user space

There is no need to wait for infrequent deployment cycles. The HPC world can be rather dynamic, and the ability to react quickly to experimental changes in cluster environments is essential.

Use built-in or community-contributed plugins

It is virtually impossible for a single entity to provide stable and tested adapters to all clusters and schedulers. That is why PSI/J enables and encourages community contributions to scheduler adapters, testbeds, and specific cluster knowledge.

PSI/J has a rich HPC legacy

PSI/J was built by a team with decades of experience building workflow systems for large-scale computing.

©2021- - The ExaWorks Project

PSI/J is funded by the U.S. Department of Energy


PSI/J is part of the Exascale Computing Project
